
The Data Center is the New Unit of Computing (NVIDIA GTC 2021 Keynote Part 3)


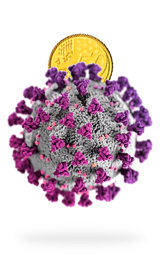

The data center is the new unit of computing. Cloud computing and AI are driving fundamental changes in the architecture of data centers. Traditionally, enterprise data centers ran monolithic software packages. Virtualization started the trend toward software-defined data centers, allowing applications to move about and letting IT manage from a single pane of glass. With virtualization, the compute, networking, storage, and security functions are emulated in software running on the CPU. Though easier to manage, the added CPU load reduced the data center's capacity to run applications, which is its primary purpose. This illustration shows the added CPU load in the gold-colored part of the stack.

Cloud computing re-architected data centers again, now to provision services for billions of consumers. Monolithic applications were disaggregated into smaller microservices that can take advantage of any idle resource. Equally important, multiple engineering teams can work concurrently using CI/CD methods. Data center networks became swamped by east-west traffic generated by disaggregated microservices. CSPs tackled this with Mellanox's high-speed, low-latency networking.

Then deep learning emerged. Magical internet services were rolled out, attracting more customers and better engagement than ever. Deep learning is compute-intensive, which drove adoption of GPUs. Nearly overnight, consumer AI services became the biggest users of GPU supercomputing technologies.

Meanwhile, the mountain of infrastructure software continues to grow. Now, adding zero-trust security initiatives makes infrastructure software processing one of the largest workloads in the data center, when the applications and services should be. The answer is a new type of chip for data center infrastructure processing, like NVIDIA's BlueField DPU.

Let me illustrate this with our own cloud gaming service, GeForce NOW, as an example. GeForce NOW is NVIDIA's GeForce-in-the-cloud service. GeForce NOW serves 10 million members in 70 countries. Two years ago, GeForce NOW had just one million members. Incredible growth. Gamers are playing concurrently on GeForce NOW servers located in data centers hundreds of miles away. GeForce NOW is a seriously hard consumer service to deliver. Everything matters: speed of light, visual quality, frame rate, response, smoothness, startup time, server cost, and, most important of all, security.

Currently, GeForce NOW uses NVIDIA's virtual GPU technology. The virtualization, networking, storage, and security are all done in software. The load is significant. We're transitioning GeForce NOW to BlueField. With BlueField, we can isolate the infrastructure from the game instances, and offload and accelerate the networking, storage, and security. The GeForce NOW infrastructure is costly. With BlueField, we will improve our quality of service and concurrent users at the same time. The ROI of BlueField is excellent.

DOCA is our SDK to program BlueField. DOCA simplifies application offload to BlueField's accelerators and programmable engines. Every generation of BlueField will support DOCA from now on, so today's applications and infrastructure will get even faster when the next BlueField arrives. I'm thrilled to announce our first data center infrastructure SDK: DOCA 1.0 is available today. There's all kinds of great technology inside: deep packet inspection, secure boot, TLS crypto offload, regular expression acceleration, and a very exciting capability, a hardware-based real-time clock that can be used for synchronous data centers, 5G, and video broadcast.

We have great partners working with us to optimize leading platforms on BlueField: infrastructure software providers, edge and CDN providers, cybersecurity solutions, and storage providers. Basically, the world's leading companies in data center infrastructure. We will accelerate it all with BlueField.

Though we're just getting started with BlueField-2, today we're announcing BlueField-3: 22 billion transistors, the first 400 gigabit per second networking chip, and 16 Arm CPUs to run the entire virtualization software stack, for instance running VMware ESX. BlueField-3 takes security to a whole new level, fully offloading and accelerating IPsec and TLS cryptography, secret key management, and regular expression processing. Whereas BlueField-2 offloaded the equivalent of 30 CPU cores, it would take 300 CPU cores to secure, offload, and accelerate the network traffic at 400 gigabits per second. A 10x leap in performance.

We're on a pace to introduce a new BlueField generation every 18 months. BlueField-3 will do 400 gigabits per second and be 10x the processing capability of BlueField-2, and BlueField-4 will do 800 gigabits per second and add NVIDIA's AI computing technologies to get another 10x boost. 100x in three years, and all of it will be needed.

A simple way to think about this is that a third of the roughly 30 million data center servers shipped each year are consumed running the software-defined data center stack. This workload is increasing much faster than Moore's law. We know this because of the amount of data we're producing and moving around. So unless we offload and accelerate this workload, data centers will have fewer and fewer CPUs to run applications. The time for BlueField has come.
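The roadmap figures quoted in the talk can be checked with a little arithmetic. The sketch below is an illustration only, not NVIDIA's method: the function names are hypothetical, and it assumes a flat 10x gain per generation starting from the 30-core figure given for BlueField-2. The BlueField-4 value it produces is therefore an extrapolation of the "100x in three years" claim, not a number stated in the keynote.

```python
# Figures quoted in the keynote. Everything else here is an illustrative assumption.
BF2_CORE_EQUIVALENT = 30        # CPU cores BlueField-2 is said to offload
SPEEDUP_PER_GENERATION = 10     # claimed leap from one generation to the next
MONTHS_PER_GENERATION = 18      # stated release cadence
SERVERS_SHIPPED_PER_YEAR = 30_000_000  # "roughly 30 million" servers per year


def core_equivalent(generation: int) -> int:
    """CPU-core equivalent of BlueField-<generation>, assuming a flat 10x per generation."""
    if generation < 2:
        raise ValueError("the talk only gives figures from BlueField-2 onward")
    return BF2_CORE_EQUIVALENT * SPEEDUP_PER_GENERATION ** (generation - 2)


def months_since_bluefield2(generation: int) -> int:
    """Months after BlueField-2 at which a generation would arrive at the stated cadence."""
    return (generation - 2) * MONTHS_PER_GENERATION


def servers_running_infrastructure() -> int:
    """Servers per year consumed by the software-defined stack (one third of shipments)."""
    return SERVERS_SHIPPED_PER_YEAR // 3


if __name__ == "__main__":
    for gen in (2, 3, 4):
        print(f"BlueField-{gen}: ~{core_equivalent(gen)} CPU cores, "
              f"{months_since_bluefield2(gen)} months after BlueField-2")
    print(f"Servers per year running infrastructure: {servers_running_infrastructure():,}")
```

Under these assumptions, two generations at an 18-month cadence span 36 months, which is where the "100x in three years" figure comes from, and one third of 30 million servers is about 10 million servers per year spent on infrastructure software.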
