In Data Science

Buckle up and tune in for a time-travel journey deep into the last 20 years, as our story begins in 2003 in a small town south of San Francisco...

Management

Big Data, and the Hadoop ecosystem in particular, emerged a little over 15 years ago and has evolved today in ways few would have guessed at the time.

When it first appeared, the open-source Hadoop immediately became a popular tool for storing and managing petabytes of data. A vast and vibrant ecosystem with hundreds of projects has formed around it, and it is still used by many large companies, even against the backdrop of modern cloud platforms. In the current article I will describe all these 15 years [1] of evolution of the Hadoop ecosystem, talk about its growth over the last decade, and the latest steps in the development of the big data field in recent years.

2003-2006: the beginning

Appeared in 2003: iTunes, Android, Steam, Skype, Tesla. Also appeared in 2004: The Facebook, Gmail, Ubuntu, World of Warcraft. Introduced in 2005: YouTube, Reddit. Occurred in 2006: Twitter, Blu-ray, Waze, Oblivion.

It all started in the early years of the new millennium, when an already-growing start-up in Mountain View called Google was trying to index the entire growing Internet. They faced two major challenges that no one had solved before:

- How to put hundreds of terabytes of data on thousands of disks installed in more than a thousand machines, with no down times, no loss of information, and no permanent availability?

- How can you parallelize computation in an efficient and fault-tolerant manner to handle all this data across all the machines?

To better understand the complexity of such an undertaking, imagine a cluster with thousands of machines, with at least one machine always under maintenance due to failures [2].

Between 2003 and 2006 Google produced three research papers explaining the internal architecture of data. These papers changed the Big Data industry forever. The first one was published in 2003 under the title "The Google File System". The second followed in 2004 with "MapReduce: Simplified Data Processing on Large Clusters". According to Google Scholar, it has since been cited more than 21,000 times. A third paper was published in 2006 under the title Bigtable: A Distributed Storage System for Structured Data.

Even though these works had a decisive impact on the emergence of Hadoop, Google itself had nothing to do with it because it kept its source code private. But there's a very interesting story behind it all, and if you haven't heard of Jeff Dean and Sanjay Gemawat, you should definitely read this article from the New Yorker.

Meanwhile, the founder of Hadoop, Yahoo! employee Doug Cutting, who had already developed Apache Lucene (the search library underlying Apache Solr and ElasticSearch), was working on a highly distributed search module called Apache Nutch. Like Google, this project needed distributed storage and serious computing power to achieve widespread scale. After reading Google's work on Google File System and MapReduce, Doug realised the fallacy of his current approach, and the architecture described in those papers inspired him to create in 2005 a daughter project for Nutch, which he named Hadoop after his son's toy (yellow elephant).

This project started with two key components: the Hadoop Distributed File System (HDFS) and implementation of the MapReduce framework. Unlike Google, Yahoo! decided to open source the project within the Apache Software Foundation. By doing so, they invited all the other leading companies to use it and participate in its development in order to bridge the technological gap with their neighbours (Yahoo! is based in Sunnyvale near Mountain View). As we shall see next, the next few years have exceeded all expectations. Naturally, Google has achieved a lot during this time as well.

2007-2008: the first co-hosts and users of Hadoop

Appeared in 2007: iPhone, Fitbit, Portal, Mass Effect, Bioshock, The Witcher. Introduced in 2008: Apple App Store, Android Market, Dropbox, Airbnb, Spotify, Google Chrome.

Pretty soon other companies started using Hadoop and encountered similar problems with processing large amounts of data. At the time, this meant a huge commitment, as they needed to organize and manage clusters of machines themselves, and writing a MapReduce task clearly didn't seem like an easy walk. Yahoo!'s attempt to reduce the complexity of programming these tasks came in the form of Apache Pig, an ETL tool capable of translating Pig Latin's own language into MapReduce steps. However, others soon joined in the development of this new ecosystem.

In 2007, the young, fast-growing company Facebook, led by 23-year-old Mark Zuckerberg, released two new projects to the public under the Apache license: Apache Hive and, a year later, Apache Cassandra. Apache Hive is a framework capable of converting SQL queries into MapReduce tasks for Hadoop. Cassandra, meanwhile, is an extensive columnar repository designed for large-scale distributed content access and updating. This repository did not require Hadoop for its operation, but soon became part of this ecosystem when connectors for MapReduce were created.

At the same time, Powerset, a lesser-known search engine company, was inspired by Google's work on Bigtable and developed Apache Hbase, another columnar repository based on HDFS. Soon after, Powerset was taken over by Microsoft, which launched a new project based on it, called Bing.

Among other things, the rapid adoption of Hadoop was decisively influenced by another company, Amazon. By launching Amazon Web Services, the first on-demand cloud platform, and quickly adding support for MapReduce via Elastic MapReduce, it enabled startups to conveniently store their data in S3, a distributed file system, and deploy and run MapReduce tasks in it, eliminating unnecessary fiddling with a Hadoop cluster.

2008-2012: growth of Hadoop vendors

Emerged in 2009: Bitcoin, Whatsapp, Kickstarter, Uber, USB 3.0. Emerged in 2010: iPad, Kindle, Instagram. Appeared in 2011: Stripe, Twitch, Docker, Minecraft, Skyrim, Chromebook.

The main pain in using Hadoop was the incredible effort required to set up, monitor and maintain the cluster. The first Hadoop services vendor, called Cloudera, emerged in 2008 and was soon bought out by Doug Cutting. Cloudera offered an off-the-shelf Hadoop distribution called CDH, as well as an interface for cluster monitoring called Cloudera Manager, which finally made it easier to install and manage clusters and related software like Hive and HBase. Hortonworks and MapR were soon founded with the same goal in mind. Cassandra also got its own vendor Datastax, which emerged in 2010.

After a while, everyone in the market agreed that while Hive was convenient as a SQL tool for managing huge ETL packages, it was ill-suited for interactive and business intelligence (BI). Anyone accustomed to standard SQL-based databases expects them to be able to scan tables with thousands of rows in a matter of milliseconds. Hive, on the other hand, required a minute for this operation (which is what happens when you ask an elephant to do the mouse work).

This was the beginning of the SQL war, which has not subsided to this day (although we'll see later on that other players have entered the arena since). Once again, Google indirectly made a decisive impact on the world of big data by releasing a fourth research paper in 2010 entitled "Dremel: Interactive Analysis of Web-Scale Datasets". It described two major innovations:

- The distributed interactive query architecture that inspired most of the interactive SQL tools discussed below.

- The form of columnar storage that underpinned several new storage formats, such as Apache Parquet, co-developed by Cloudera and Twitter, and Apache ORC, released by Hortonworks with Facebook.

Inspired by the work on Dremel, Cloudera, in an effort to address high latency in Hive and break away from the competition, decided in 2012 to launch a new open source SQL engine for interactive queries called Apache Impala. In parallel, MapR launched its own open-source interactive engine called Apache Drill. But Hortonworks management, instead of building a new engine from scratch, chose to speed up Hive by launching Apache Tez project, a kind of version 2 for MapReduce, and adapting Hive to run Tez instead of MapReduce. There were two reasons for this decision: firstly, the company had less staff resources than Cloudera, and secondly, most of its customers were already using Hive and would prefer to speed it up rather than switch to a different SQL engine. As we'll find out later, many other distributed engines soon emerged, and the new slogan was "Everything is faster than Hive".

2010-2014: Hadoop 2.0 and the Spark revolution

Emerged in 2012: UHDTV, Pinterest, Facebook reaches 1 billion active users, Gagnam Style video reaches 1 billion views on Youtube. Emerging in 2013: Edward Snowden leaks NSA files, React, Chromecast, Google Glass, Telegram, Slack.

While Hadoop was consolidating its position and was busy implementing a new core YARN resource management component, which had previously been awkwardly handled by MapReduce, a minor revolution began with the sudden rise in popularity of Apache Spark. It was apparent that Spark would prove to be a great replacement for MapReduce, due to its greater capabilities, simpler syntax and high performance, which was due, in part, to its ability to cache data in RAM. The only weakness compared to MapReduce at first was the instability of Spark, but this problem was resolved as the product evolved.

It also had high interoperability with Hive, because SparkSQL was based on Hive syntax (in fact, the original Spark developers borrowed the lexer and parser from Hive), which made it easy to switch from Hive to SparkSQL. On top of that, Spark attracted a lot of attention in the machine learning world, as previous attempts to write MO algorithms via MapReduce, such as Apache Mahout, were clearly losing out to Spark implementations.

To support and monetise Spark's rapid growth, its creators launched Databricks in 2013. The goal of this project was to enable everyone to process huge amounts of data. To do this, the platform implemented simple and efficient APIs in many languages (Java, Scala, Python, R, SQL and even .NET), and native connectors for many data sources and formats (csv, json, parquet, jdbc, avro, etc.). What is interesting here is that Databricks' market strategy differed from that of its predecessors.

Instead of offering an on-premises deployment of Spark, the company took the position of a cloud-only platform, initially integrating with AWS (which was the most popular cloud at the time) and then with Azure and GCP. Nine years later, it's safe to say it was a smart move.

Meanwhile, new open source projects like Apache Kafka, a distributed messaging queue developed by LinkedIn, and Apache Storm [3], Twitter's distributed streaming computing engine, were emerging to handle streaming events. Both tools were released in 2011. In the same period, the Amazon Web Services platform achieved unprecedented popularity and success: this can be demonstrated by the Netflix surge in 2010 alone, made possible primarily by the Amazon cloud. Competition in the cloud sphere began to emerge. First Microsoft Azure emerged in 2010, followed by Google Cloud Platform (GCP) in 2011.

2014-2016: reaching a climax

Launched in 2014: Terraform, Gitlab, Hearthstone. Started in 2015: Alphabet, Discord, Visual Studio Code.

Since then, the number of projects that were part of the Hadoop ecosystem has continued to grow exponentially. Most of them started to be developed before 2014, and some came out under an open source license before that time as well. Some projects even began to cause confusion as a point was reached where multiple software solutions already existed for every need.

Higher-level projects like Apache Apex (closed) or Apache Beam (mainly promoted by Google) also started to appear, aiming at providing a unified interface for handling batch and streaming processes on top of various distributed backends like Apache Spark, Apache Flink or Google DataFlow.

It might also be mentioned that good opsource schedulers have finally started to hit the market - thanks to Airbnb and Spotify. The use of a scheduler is usually tied to the business logic of a corporation, and this software is written quite naturally without much complexity, at least at first. Then you do realise that it's very difficult to keep it simple and easy to understand for others. This is why virtually all major technology companies have written their own, and sometimes open source, products: Yahoo! - Apache Oozie, LinkedIn - Azkaban, Pinterest - Pinball (closed) and many others.

However, none of these solutions were ever found to be the best, with most companies sticking to their own. Fortunately, sometime in 2015, Airbnb launched the open source project Apache Airflow and Spotify launched Luigi [4]. These were two schedulers that quickly gained interest and were adopted by many companies. In particular, Airflow is now available in SaaS mode on Google Cloud Platform and Amazon Web Services.

On the SQL side, too, several distributed data warehouses have emerged, aiming to provide faster query processing capabilities compared to Apache Hive. We've already talked about Spark SQL and Impala, but it's also worth mentioning Presto, an open source project launched by Facebook in 2013. In 2016, Amazon renamed this project Athena as part of its SaaS offering, and when its initial developers left Facebook, they made a separate fork called Trino.

At the same time, several proprietary distributed storage solutions for analytics also emerged, which could include Google BigQuery (2011), Amazon Redshift (2012) and Snowflake (2012).

A complete list of all the projects listed as part of the Hadoop ecosystem can be found on this page.

2016-2020: Hadoop is to be replaced by containerisation and deep learning

Appeared in 2016: Occulus Rift, Airpods, Tiktok. Appeared in 2017: Microsoft Teams, Fortnite. Appeared in 2018: GDPR, Cambridge Analytica scandal, Among Us. Appeared in 2019: Disney+, Samsung Galaxy Fold, Google Stadia

Over the next few years, there was a general acceleration and interconnectedness. It's hard to account for all the new technologies and companies in the big data space, so I've decided to talk about just four major trends that I think have had the maximum impact.

The first trend was the massive move of data infrastructures to the cloud, with HDFS being replaced by cloud storage like Amazon S3, Google Storage and Azure Blob Storage.

The second trend was containerisation. You have probably heard of Docker and Kubernetes. Docker is a containerization framework that came out in 2011 and started to gain popularity dramatically in 2013. In June 2014, Google released an open source container orchestration tool called Kubernetes (aka K8s), which many companies immediately adopted to build new distributed/scalable architectures. Docker and Kubernetes made it possible to deploy new kinds of such architectures, already more stable, robust, and suitable for many cases, including real-time event-driven transformations. Hadoop took time to catch up with Docker, and support for running containers in it only appeared in version 3.0 in 2018.

The third trend, as mentioned above, is the emergence of fully managed, parallelised storage for SQL-based analytics. This point is well demonstrated by the formation of the Modern Datastack and the release of the dbt command line tool in 2016.

Finally, the fourth trend that influenced Hadoop was the development of deep learning. In the second half of the 2010s, everyone had already heard about deep learning and artificial intelligence. AlphaGo marked a landmark moment by beating Ke Gi, the world champion of Go, in the same way that IBM's Deep Blue had beaten the chess champion, Yuri Kasparov, twenty years earlier. This technological leap, which was already working wonders and promising even more - such as autonomous cars - often involved big data, as AI models required huge amounts of information processing for their training. However, Hadoop and AI were too different and it was difficult to make the technologies work together. In fact, deep learning indicated the need for new approaches for big data processing and confirmed that Hadoop is not a universal tool.

To put it in a nutshell: data scientists working with deep learning needed two things that the Hadoop ecosystem could not provide. First, they needed GPUs, which were usually not available in Hadoop cluster nodes.

Second, scientists needed the latest versions of deep learning libraries, such as TensorFlow and Keras, which were problematic to install on the whole cluster, especially when many users asked for different versions of the same library. This particular problem was perfectly solved by Docker, but integrating this tool into Hadoop took time, and data analysts were not prepared to wait. Because of this, they usually preferred to run one powerful virtual machine with 8 GPUs instead of using a cluster.

That's why when Cloudera made its initial public offering (IPO) in 2017, the company was already developing and distributing its newest product, Data Science Workbench. This solution was no longer based on Hadoop or YARN, but on containerisation with Docker and Kubernetes, allowing data scientists to deploy models in their own environment as a containerised application without risk to security or stability.

But it wasn't enough to return market success. In October 2018, Hortonworks and Cloudera underwent a merger and only the Cloudera brand remained. In 2019 MapR was bought by Hewlett Packard Enterprise (HPE) and in October 2021 private equity firm CD&R acquired Cloudera for less than its original share price.

Although the demise of Hadoop does not mean the complete demise of the entire ecosystem, as many large companies are still using it, especially for on-premises deployments. Many solutions built around the technology or parts of it are also using it. Innovations are also being introduced, such as Apache Hudi, released by Uber in 2016, Apache Iceberg, launched by Netflix in 2017, and the open source product Delta Lake, which Databricks developers introduced in 2019.

Interestingly, one of the main goals of these new storage formats was to circumvent the implications of the first trend described. Hive and Spark were originally created for HDFS, and some of the performance elements guaranteed by this file system were lost when moving to cloud storage like S3, resulting in a drop in efficiency. But I won't go into detail here, as that would require a whole article.

2020-2023: Modernity

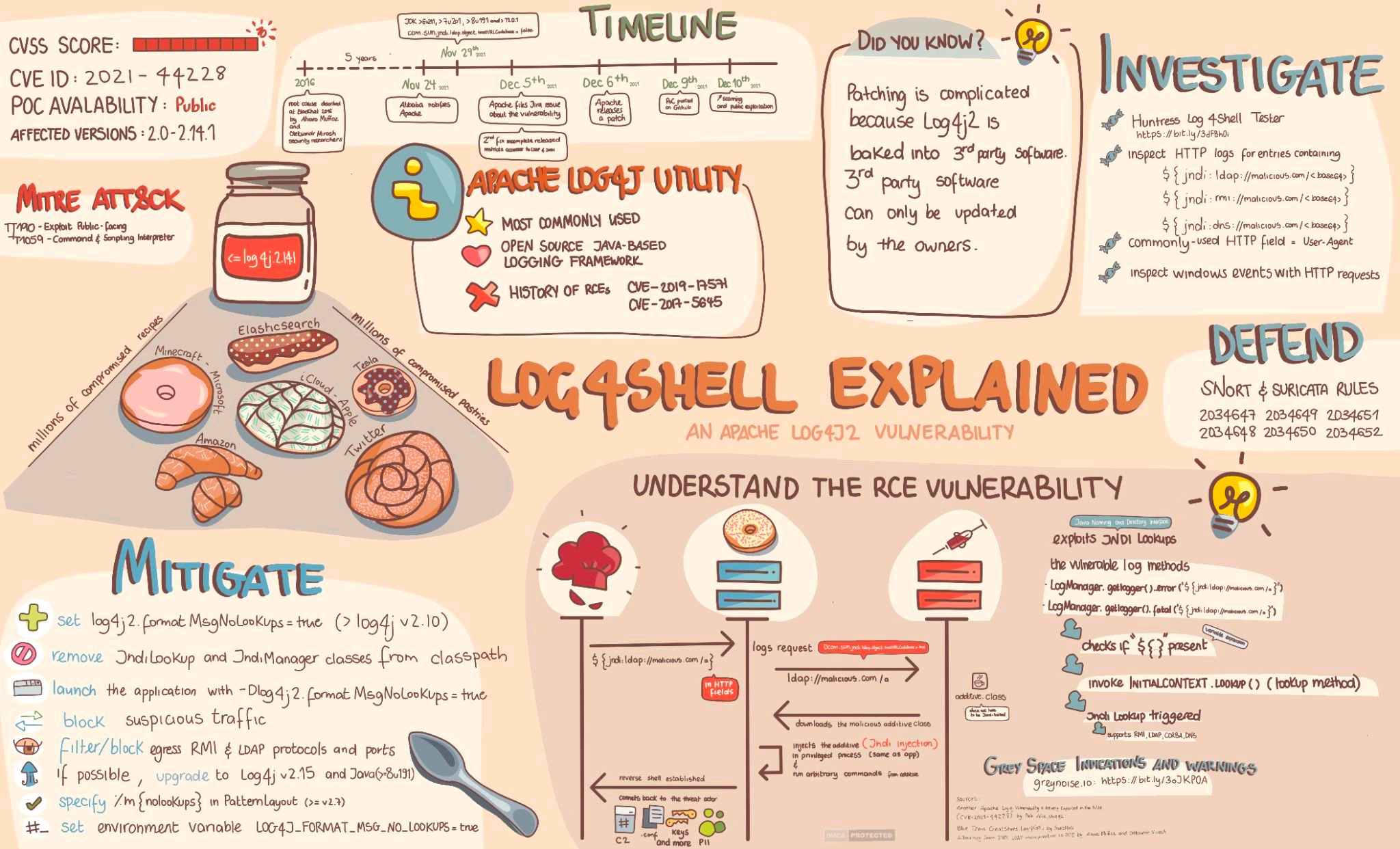

Appeared in 2020: COVID-19 pandemic. Appeared in 2021: Log4Shell vulnerability, Meta, Dall-E. Introduced in 2022: Midjourney, Stable Diffusion.

Hadoop cloud deployments have now largely been replaced by Apache Spark or Apache Beam [5] applications (mostly on GCP) in favour of Databricks, Amazon Elastic Map Reduce (EMR), Google Dataproc/Dataflow or Azure Synapse. I've also seen many young companies looking to use the "Modern Data Stack" built around storage for analytics such as BigQuery, Databricks-SQL, Athena or Snowflake, interfaced with code-free (or low-code) data delivery tools and organised using dbt, eliminating the need for distributed computing solutions like Spark.

Naturally, companies that still prefer to deploy their projects locally continue to use Hadoop and other open source projects like Spark and Presto. But every year the proportion of data moving to the cloud increases, and I see no reason for this trend to change.

As the data industry has evolved, more and more management and cataloguing tools have appeared. In this regard, it is worth mentioning opshoring solutions like Apache Atlas, released by Hortonworks in 2015, Amundsen, launched by Lyft in 2019, and DataHub, which LinkedIn launched in 2020. A number of closed start-ups have also sprung up in this segment.

New companies have also been built around the latest scheduler implementation technologies. Prefect, Dagster and Flyte, which launched their open repositories in 2017, 2018 and 2019 respectively and are now already challenging Airflow's reigning supremacy, can be named here.

Finally, the Lakehouse concept has begun to take shape. Lakehouse is a platform that combines the advantages of a data lake and a data repository [6]. It enables data scientists and business analysts to work within a single platform, simplifying management, sharing information, and improving security. Databricks was the most active in this segment because of Spark's compatibility with both SQL and DataFrames. It was followed by Snowflake's Snowpark offering, then Microsoft's Azure Synapse, and last to go was Google, which launched BigLake. Looking towards opsors, however, Apache Dremio has been offering a similar platform since 2017.

2023: Who will predict the near future?

Since the beginning of Big Data's history, the number of open source projects and startups has only continued to grow year after year (just estimate the scale of the industry in 2021). I remember around 2012 there were predictions that the new SQL wars would end and the true winners would be announced. So far this has not happened and it is difficult to predict how the situation will develop further. It will take more than a year for all the dust to settle. Although if we do try to speculate, I would predict the following:

- As others have said, the major data platforms (Databricks, Snowflake, BigQuery, Azure Synapse) will continue to improve and add new features to fill the gaps between each other. I expect to see more and more connectivity between components, including between data languages like SQL and Python.

- New projects and companies may slow down in the next couple of years, although that will be due more to a lack of funding after the next dot-com bubble bursts (if any) than to a lack of desire or ideas.

- From the outset, the most scarce resource has been qualified personnel. This means that for most companies [7] it is easier to invest extra money to solve performance problems or move to more cost-effective products than to spend extra time optimising. This is especially true now that the cost of distributed storage services has become so low. But perhaps at some point vendors will find it difficult to continue price competition by dumping, and prices will go up. Even so, the volume of data retained by businesses continues to grow year on year and with it the associated financial losses due to inefficiencies. Maybe one day a trend will emerge where people start looking for new, cheaper, opt-in alternatives, leading to a revival of a new round of Hadoop technologies.

- In the long term, however, in my opinion, the real winners will be the cloud providers Google, Amazon and Microsoft. All they have to do is wait and assess which direction the wind is blowing, and then at the right time acquire (or simply recreate) the most optimal technology. Every tool integrated into their cloud makes users' lives much easier, especially when it comes to security, governance, access control and cost management. And provided there are no serious organisational errors in the process, I see no real competition for these companies.

Conclusion

I hope you enjoyed this brief excursus into the history of big data and that it helped you better understand or remember where and how it all started. I've tried to make this article understandable to everyone, including non-technical people, so feel free to share it with colleagues who might find it interesting.

In conclusion, there is no way human knowledge and technology in AI and big data could have progressed so quickly without the magical power of open source and information sharing. We should be grateful to the founders of Google, who originally shared their expertise through research papers, and to all the companies that have disclosed the source code of their projects. Open and free (or at least cheap) access to technology has been the greatest driver of innovation in the internet over the past 20 years. The real boom in software development began in the 1980s, when people could afford home computers. The same could be said about 3D printing, which existed for decades and only started to gain momentum in the 2000s with the advent of self-replicating machines, or the release of the Raspberry Pi single board computers that spurred the DIY movement.

Open and easy access to knowledge should always be encouraged and defended, even more so than it is now. The war over these principles never falters. And one of its most important battles is unfolding today in the field of AI. Big companies are making their mark on opsorsors (Google introduced TensorFlow, for example), but they have also learned to use open source software like venus flycatchers, luring users into their proprietary systems and leaving the most important (and difficult to recreate) functionality under the protection of patents.

It is vital for all humanity and the global economy that we support open source projects and knowledge sharing (like Wikipedia) with all our might. Governments, their people, companies and most investors need to understand this: growth can be driven by innovation, but innovation, in turn, is fueled by sharing knowledge and technology with the masses.

Footnotes

1. It's even 20 years if you count Google's prehistory. Hence the title of the article.

2. In 2022 we may have made enough progress in hardware reliability to not have to worry so much about such a nuance, but 20 years ago it was definitely a serious inconvenience.

3. In 2016 Twitter released Apache Storm replacement Apache Heron (still in the Apache incubation stage).

4. In 2022 Spotify developers decided to stop using Luigi and switched to Flyte.

5. I suspect that Apache Beam is used mainly on GCP with DataFlow.

6. As Databricks presents it, Lakehouse combines the flexibility, high cost-effectiveness and scale of data lakes with data management and ACID storage transactions.

7. Naturally, I'm not talking here about companies the size of Netflix or Uber.