In Translation and proofreading

1 5 Byte Pair Encoding - read the full article about machine translation, Translation and proofreading and from From Languages to Information on Qualified.One

Youtube Blogger

In this lecture, we introduce the Byte Pair Encoding, or BPE algorithm, which uses corpus statistics to decide how to segment a text into tokens.

Instead of just breaking up words at every whitespace, or as we saw for Chinese, breaking up words at every character, the algorithm were going to introduce now uses the data to tell us how to tokenize.

And this family of algorithms is often called subway tokenization because the tokens can be parts of words as well as whole words.

There are three common subword tokenization, algorithms, byte pair encoding, or BPE, which well talk about now.

An algorithm often called Unigram, for Unigram language model tokenization and the wordpiece algorithm.

These algorithms all have two parts. First, a token learner that takes a training corpus and induces a vocabulary set of tokens.

That is the vocabulary that tokenizer will try to map things into.

And then the second part is a token segmenter. That takes a test sentence and tokenizes it according to the vocabulary it learned from the training corpus. So lets start by talking about the first part, the token learner.

Lets imagine that we start with a vocabulary that is the set of all individual characters.

So maybe we have all the capital letters and all the lowercase letters. Thats our vocabulary.

And were going to repeat the following K times.

We first choose the two symbols that are most frequently adjacent in the training corpus, two letters that occur most often next to each other.

Lets just say that these are A and B, and now were gonna do is were gonna add a new symbol, the merged symbol AB to the vocabulary, and were going to replace the entire training corpus every adjacent A and B with this new symbol, AB.

And were gonna do this until K mergers have been done, and K is a parameter of the algorithm.

We can see this more formally here. So we begin with a vocabulary V.

All the unique characters in the Corpus and then K Times (and the string Corpus C and the number of merges K are parameters to the algorithm), K times were going to choose the two most frequent adjacent tokens.

Well make a new token with their concatenation. Well add that to the vocabulary.

And now we just replace all the individual pairs of those two tokens in the corpus with this new token. And then when were done this K times, we return the new learned vocabulary.

Now, there is an addendum to the BPE algorithm, which is that in practice, most subword algorithms are run inside space, separated tokens.

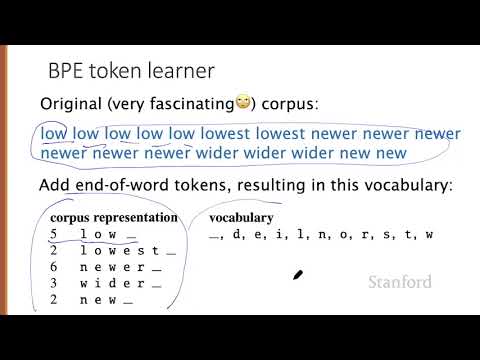

So we commonly first add a special end of tokens symbol and Ill use underbar to represent that before each space in the training corpus before we separate into letters. So lets walk through an example. Imagine the following corpus.

Low. Low. Low. Low. Low. Lowest. Lowest. Newer newer.

Newer. Newer. Newer. Newer. Wider. Wider. Wider. New new.

And lets take that literarily fascinating corpus and add a little underbar for an end of word token before every space.

And the result is going to be this vocabulary. We have each of the letters in the original Corpus D E I L N O R S T W.

And the new token, the underbar and for convenience, Im going to represent the corpus in the following way, which is Im going to use counts of different strings of letters followed by under Barbours, rather than copying this entire long corpus representation every time.

Im just going to use this. So the word- the sequence l o w underbar occurs five times.

There it is. One, two, three, four, five and so on. I havent written the underbars up here.

So were gonna refer to the corpus this way and the vocabulary this way.

Lets walk through the algorithm, seeing how we change the corpus and augment the vocabulary.

So were going to begin with the corpus as we started with it and our original vocabulary, and were going to first say which two letters are next to each other most often? And that is going to be an E and R next to each other six times in the context of N E W.

And three times in the context of W I.D. So thats nine times.

And were gonna merge the E next to R into a new symbol ER.

Were going to add ER to our vocabulary. Were gonna merge all the cases of E R in the corpus and now were gonna move on.

Whats the next most frequent pair? Its another thing that occurs nine times the new symbol, E.R. is now next to the underbar, six times here and three times there.

So its going to appear in the corpus a total of nine times. So were going to merge ER nextto under bar to a new symbol.

ER-underbar. We can add that symbol to our vocabulary and were gonna merge them in our corpus.

The most frequent next symbol is N next to E, occurs six times in this context, two times in that context for a total of eight times.

So were going to merge N nextto E to a new symbol, NE. So we add the new symbol NE and now we merge them in our corpus.

And we keep moving on in that way. Were next going to merge NE with W producing a new token, NEW, L with O, l o w.

So we have a token. LOW. And then NEW with e r underbar, we have a new token.

NER-underbar and LOW with an underbar.

So we have a token LOW-underbar. So now we have the following vocabulary that weve added a whole bunch of new words to.

So thats our training set.

Next, were going to take that set of merges weve learned in training and apply it to the segmenter algorithm running on our test set.

And were going to run those merges greedily in the order we learn them not based on frequency.

So remember, in our training set, we picked our merges based on how frequently letters occurred together in our test set.

Were not going to look at the test frequencies at all. Were just going to run our merges in the order we learned them in the training set.

So were just going to, for example, first, (because the first merge we did is merge E with R in the training set), in the test set.

were going to merge all the ERs to ER. And next, were going to merge all the ER- underbars to, E.R. under bars and so on.

And so the result is that the string n e w e r underbar would be tokenized as a full word, because if we look back at our vocabulary there it is.

NEWER under bar is a word in our vocabulary.

But if we see the string l o w e r under bar, thats going to be tokenized.

Thats two separate tokens low and ER underbar because again, looking back at our vocabulary, there is no token L OW E R underbar.

Theres a token lo- lo, sorry, theres a token low and theres a token ER underbar.

So itll, itll separate the words into those two tokens. Now, the resulting BP tokens will occur, words that occurred frequently in the corpus, so frequent words are going to be tokenized, and also frequent sub words.

And often those frequent sub words are morphemes like E S T or e r. A morpheme is the smallest meaning bearing unit of a language.

So, for example, the word unlikeliest has the three morphemes un-, likely- and -est, and often these BPE tokens turn out to correspond to morphemes, although not always.

The Byte Pair Encoding algorithm weve described is one of a set of corpus based tokenizers that are extremely widely used throughout natural language processing.

From Languages to Information: 1 5 Byte Pair Encoding - Translation and proofreading